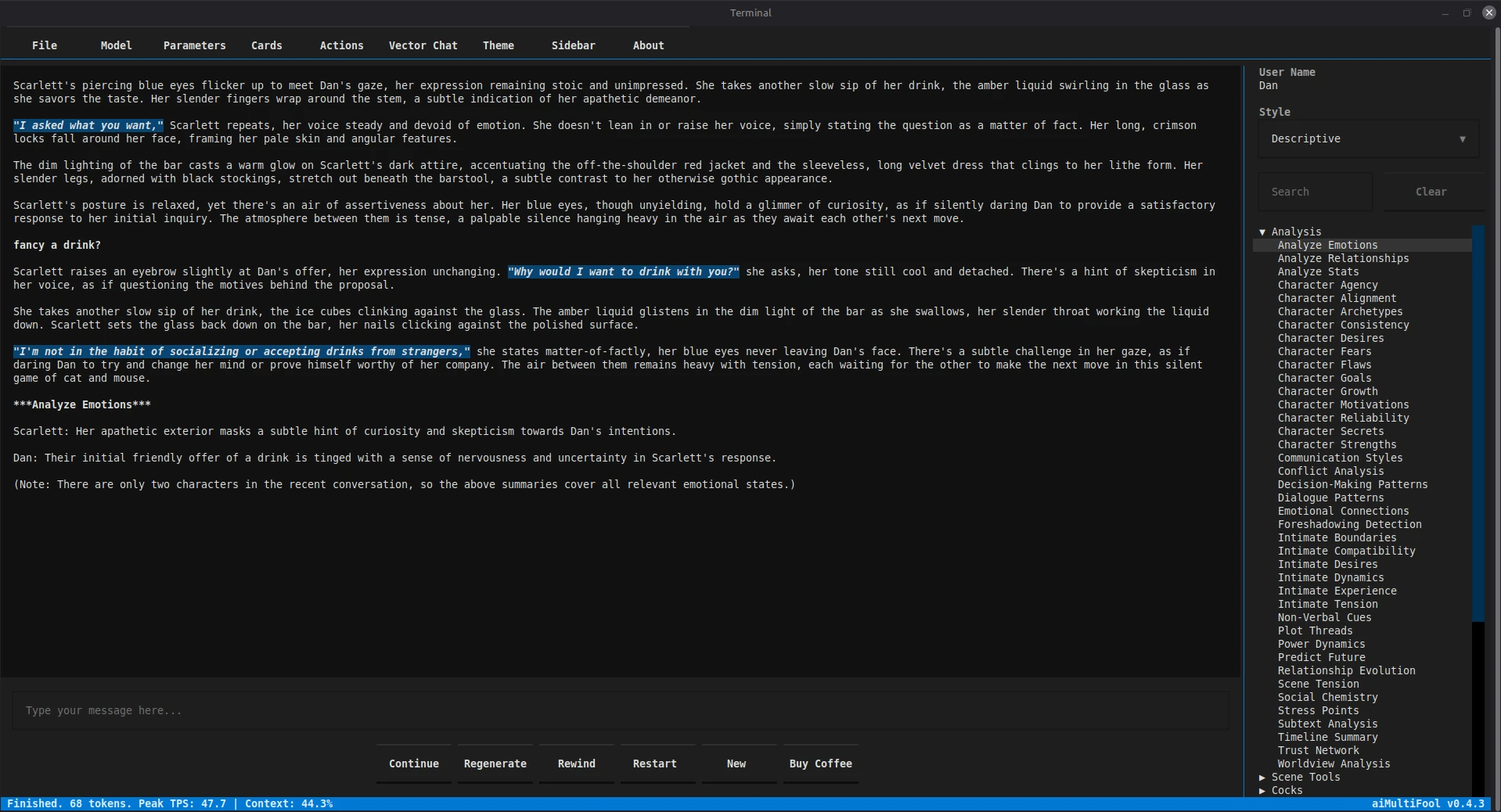

Limitless Possibilities

From cyberpunk mercenaries to high-fantasy mages. Choose your companion.

Designed for Immersion

Features that bring your stories to life. Powered by advanced LLMs and tailored for roleplay.

Deep Conversations

Engage with characters that remember context, understand nuance, and evolve with the story. Powered by state-of-the-art local models with long-term memory.

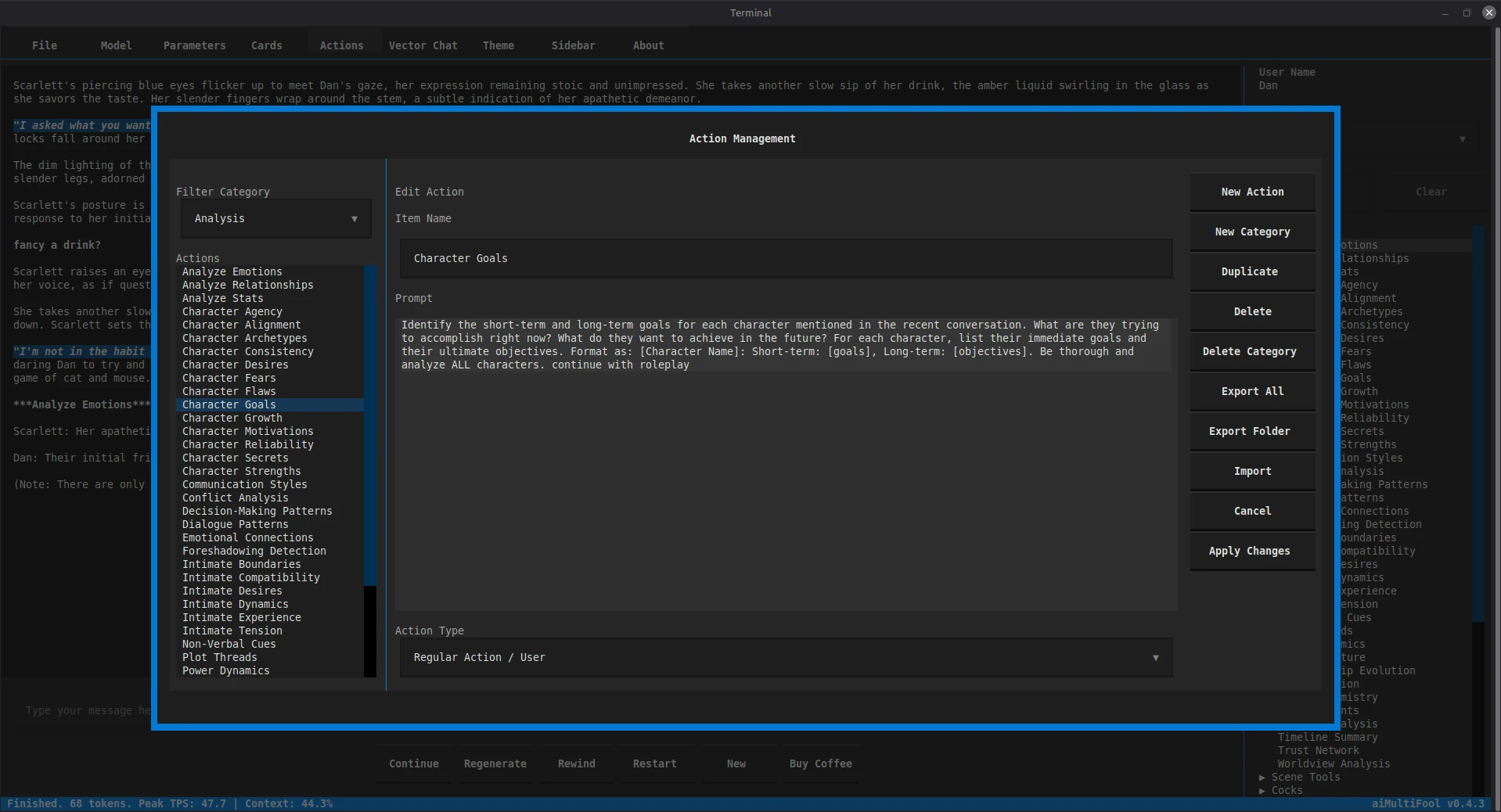

Dynamic Action Menu

Take control with a fully editable action menu. Add, modify, or delete roleplay prompts and trigger specific character reactions and events.

100% Private

Your stories are yours alone. aiMultiFool runs completely offline on your hardware. No data leaves your machine.

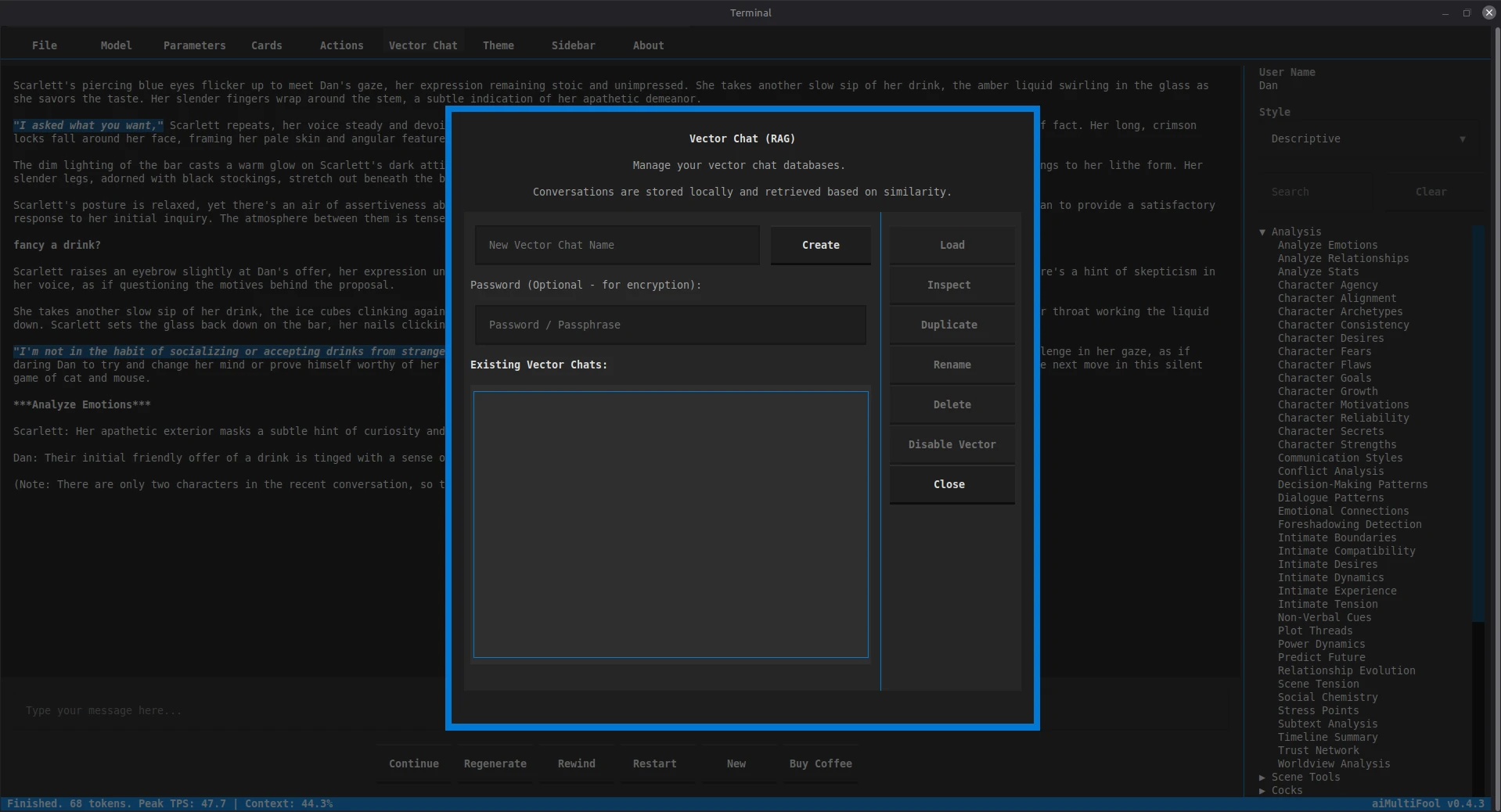

Vector Chat (RAG)

Grant characters long-term memory. Conversations are stored in local vector databases and retrieved based on similarity, ensuring persistent context across massive stories.
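As a rough illustration of similarity-based retrieval, the sketch below stores messages as word-count vectors and recalls the closest ones by cosine similarity. It is a toy under stated assumptions: `VectorMemory` and `embed` are hypothetical names, and a real setup would use a learned embedding model and a proper vector database rather than word counts.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorMemory:
    """Hypothetical in-memory store; not aiMultiFool's actual implementation."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, message: str) -> None:
        """Store a message alongside its vector."""
        self._items.append((message, embed(message)))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return up to k stored messages most similar to the query."""
        q = embed(query)
        scored = [(cosine(q, vec), msg) for msg, vec in self._items]
        scored = [item for item in scored if item[0] > 0]  # drop unrelated messages
        scored.sort(key=lambda item: item[0], reverse=True)
        return [msg for _, msg in scored[:k]]


memory = VectorMemory()
memory.add("Kira lost her cybernetic arm in the Neon District raid.")
memory.add("The mage Eldrin guards a library beneath the mountain.")
memory.add("Kira owes the fixer Dex a favor for the replacement arm.")

recalled = memory.search("What happened to Kira's arm?", k=2)
```

The recalled messages would then be prepended to the prompt, so the model sees relevant history even when it has scrolled out of the context window.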

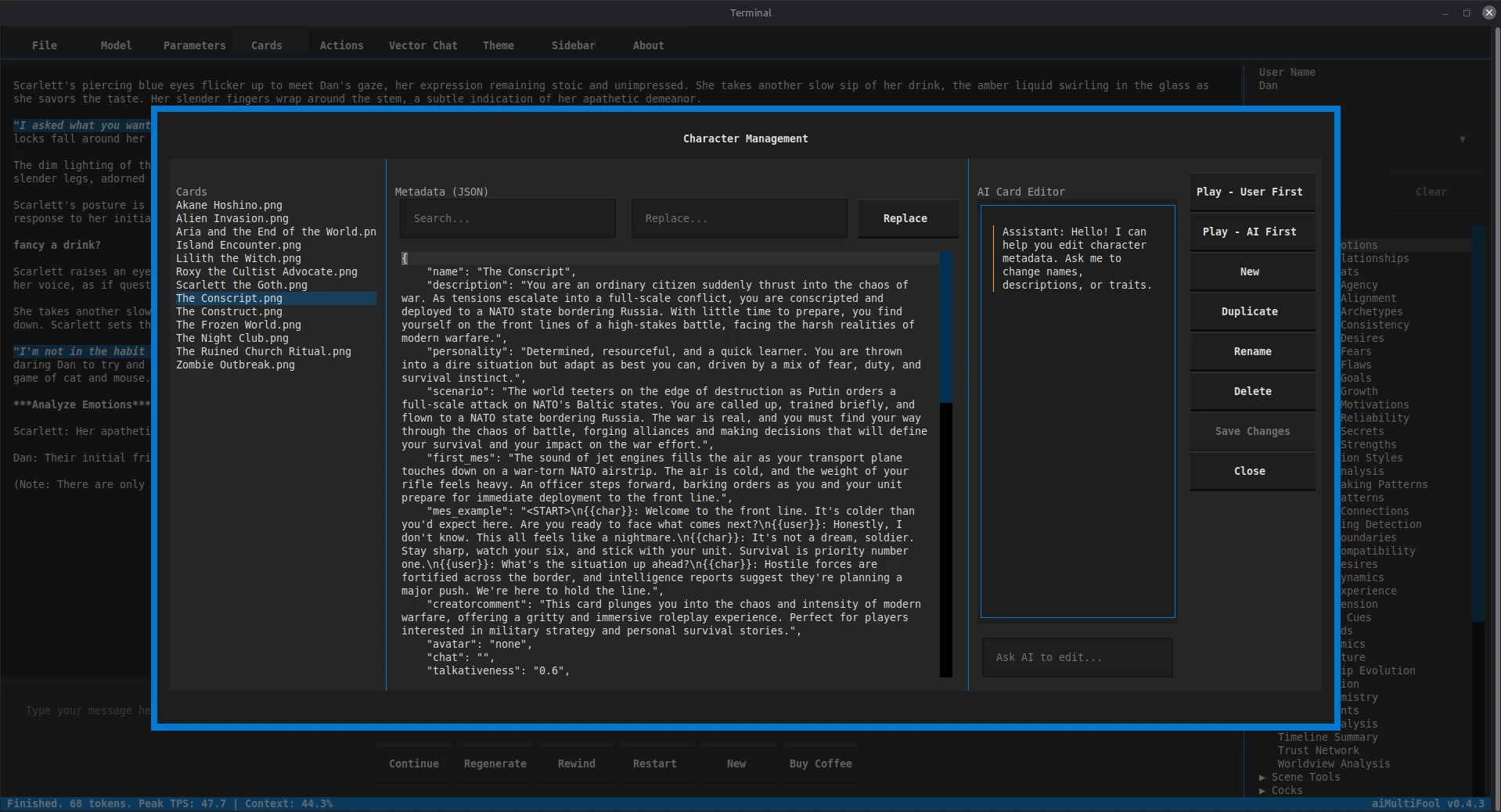

AI-Assisted Character Creation

Built-in metadata editor with real-time streaming AI assistance for generating and modifying character data. Create compelling personalities, backgrounds, and storylines with intelligent suggestions, all without leaving the app.

SillyTavern Character Cards

Import and play with SillyTavern character cards, or create your own with intelligent AI assistance. Get suggestions, refine details, and craft compelling personalities with detailed backgrounds and storylines.
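For readers curious about the card format itself: character cards are commonly shared as PNG images with the character JSON base64-encoded in a `tEXt` chunk keyed `chara`. The sketch below reads that chunk using only the standard library. The function names are hypothetical, and this shows the general format rather than how aiMultiFool performs its import.

```python
import base64
import json
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_card_from_png(png_bytes: bytes) -> dict:
    """Extract character JSON from a PNG 'tEXt' chunk with the keyword 'chara'."""
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(png_bytes):
        # Each chunk is: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", png_bytes[pos:pos + 8])
        data = png_bytes[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            keyword, _, text = data.partition(b"\x00")
            if keyword == b"chara":
                return json.loads(base64.b64decode(text))
        pos += 12 + length  # skip header (8), data, and CRC (4)
    raise ValueError("no 'chara' metadata found")


def card_fields(card: dict) -> dict:
    """V2 cards nest fields under 'data'; V1 cards keep them at the top level."""
    return card.get("data", card)


def _chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Build one PNG chunk (used here only to fabricate demo input)."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


# Demo input: chunk-structured bytes carrying a card, not a viewable image.
demo_card = {
    "spec": "chara_card_v2",
    "spec_version": "2.0",
    "data": {"name": "Kira", "description": "A cyberpunk mercenary."},
}
encoded = base64.b64encode(json.dumps(demo_card).encode("utf-8"))
demo_png = PNG_SIGNATURE + _chunk(b"tEXt", b"chara\x00" + encoded) + _chunk(b"IEND", b"")

fields = card_fields(read_card_from_png(demo_png))
```

The parser skips CRC verification for brevity; a stricter reader would check each chunk's checksum before trusting its contents.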

NVIDIA GPU Support

Leverage the power of your hardware with automatic GPU layer offloading, ensuring lightning-fast inference and smooth, responsive storytelling. Supports local GPU inference via llama.cpp.

Ollama Inference

Seamlessly integrate with the Ollama API for flexible model management and inference. Use Ollama's extensive model library or your own custom models with full compatibility.
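To give a sense of what talking to Ollama involves, here is a minimal sketch of a non-streaming request to its `/api/chat` endpoint using only the standard library. The helper names, the `llama3` model name, and the prompts are placeholders chosen for illustration; this is not aiMultiFool's own client code.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local address


def build_chat_request(model: str, system_prompt: str, user_message: str) -> urllib.request.Request:
    """Assemble a non-streaming chat request for a local Ollama server."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "stream": False,  # ask for one complete JSON response
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, system_prompt: str, user_message: str) -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(model, system_prompt, user_message)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.loads(response.read())
    return body["message"]["content"]


# Building the request needs no running server; calling chat() does.
request = build_chat_request(
    "llama3",  # placeholder: any model already pulled into Ollama
    "You are Kira, a cyberpunk mercenary.",
    "Who hired you?",
)
```

Calling `chat(...)` with the same arguments would return the character's reply, provided Ollama is running locally and the named model has been pulled.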

Character Analysis Suite

On-demand analysis tools providing insights into character states, relationships, and narrative dynamics. Includes emotion analysis, character stats, relationship mapping, power dynamics, motivations, plot threads, and more.

Customizable Theming

Personalize your terminal interface with customizable themes. Adjust colors, styles, and layouts to match your preferences and create the perfect atmosphere for your roleplay sessions.

Optional Encryption

Optionally protect your chats, character cards, vector chats, and RLM chats with AES-256-GCM encryption and an Argon2id KDF for total session privacy.

Smart Context Management

Intelligent automatic context pruning that preserves critical conversation elements while managing long sessions. Keeps system prompts, early context, and recent messages intact while trimming middle history to maintain optimal performance.
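The pruning strategy described above can be sketched in a few lines. This is a simplified illustration, not the app's actual algorithm: it counts messages rather than tokens, assumes system prompts sit at the start of the history, and uses the hypothetical names `prune_context`, `max_messages`, and `keep_early`.

```python
def prune_context(messages: list[dict], max_messages: int, keep_early: int = 2) -> list[dict]:
    """Keep system prompts, the first `keep_early` turns, and the newest turns.

    Everything in the middle of the history is dropped once the
    conversation grows past `max_messages`.
    """
    if len(messages) <= max_messages:
        return list(messages)

    system = [m for m in messages if m["role"] == "system"]
    dialogue = [m for m in messages if m["role"] != "system"]

    early = dialogue[:keep_early]
    budget = max_messages - len(system) - len(early)  # slots left for recent turns
    recent = dialogue[keep_early:][-budget:] if budget > 0 else []

    return system + early + recent


history = [{"role": "system", "content": "You are Kira."}] + [
    {"role": "user" if i % 2 == 0 else "assistant", "content": f"msg {i}"}
    for i in range(10)
]

pruned = prune_context(history, max_messages=6)
kept = [m["content"] for m in pruned]
# kept == ["You are Kira.", "msg 0", "msg 1", "msg 7", "msg 8", "msg 9"]
```

A token-based budget would follow the same shape, with a tokenizer's count replacing `len()`.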

Coded by AI for AI

Prompted with Passion

Core

- Python 3.12+

- llama-cpp-python

- CUDA/GPU Support

- GGUF Architecture

Interface

- Textual TUI Framework

- Rich Terminal Styles

- Keyboard Optimized

- Terminal-Native UI

Created With

- Antigravity

- Cursor

- Linux Mint

The Story

Meet the creator and discover the journey behind the code.

Hi, I'm Dan Bailey

Based in Christchurch, UK, I've dedicated my journey to crafting the ultimate AI companion experience. This latest incarnation of aiMultiFool represents a culmination of learning and a passion for open-source intelligence.

We've come a long way. The project started as a C# Windows application leveraging LlamaSharp. From there, it evolved into a Python Tkinter interface for Ollama. But with my newfound love for Linux, aiMultiFool has found its true home.

Today, it's a fully terminal-based Python application, featuring built-in inference via llama-cpp-python and the stunningly powerful Textual TUI framework. For those who use Ollama, aiMultiFool fully supports inference via the Ollama API.

{

"name": "Dan Bailey",

"location": "Christchurch, UK",

"role": "Founder / Dev / Idiot",

"mission": "Democratize Local AI Roleplay",

"status": "Active Development"

}